VR Gesture Combo

| Company | Engine | Platform | Skills |
| --- | --- | --- | --- |
| Academic Project | Unity | Windows PC, Meta Quest 3 | C#, XR Hands |

Experiment

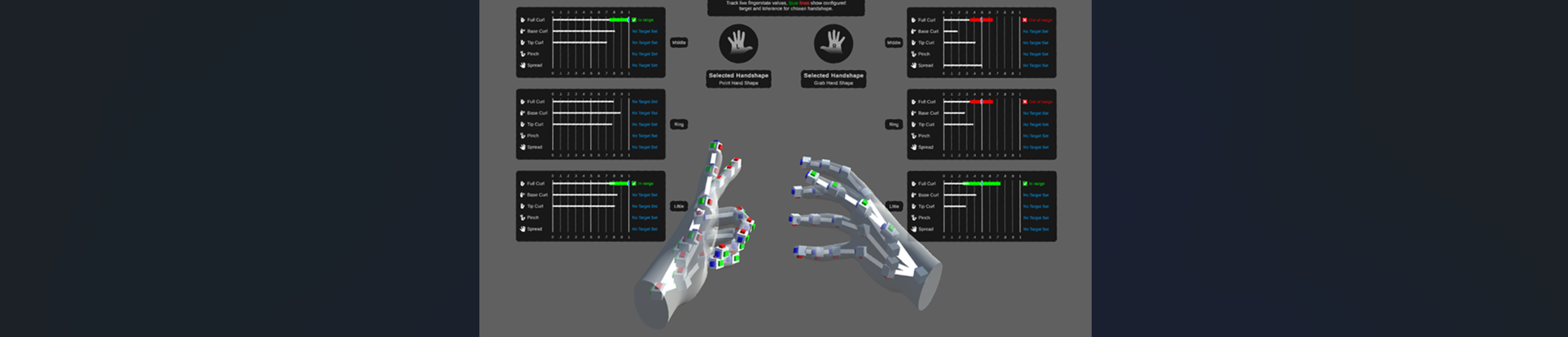

More VR apps are adopting hand tracking as an input method in place of controllers. After Unity released an API for defining custom gestures (XR Hands 1.4.0-pre.1), I ran a simple test to see how it could be applied in a game.

To make gesture-based interaction richer, I implemented a custom combo system on top of the XR Hands API. In the video, the floating text boxes visualize the recognized combo sequences. When multiple gestures are detected, their labels appear in the same row, so the executed combo is displayed in real time.
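The row-based display described above could be sketched as follows. This is a minimal illustration in plain C#, not the project's actual code: the class name `ComboRowLog`, the gesture names, and the rule that a pause longer than `maxGapSeconds` starts a new row are all assumptions made for the example. In Unity, `Add` would be called from whatever callback reports a recognized gesture.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: groups recognized gesture names into display rows.
// Assumption: gestures separated by at most maxGapSeconds belong to one combo.
public class ComboRowLog
{
    private readonly double maxGapSeconds;
    private readonly List<List<string>> rows = new List<List<string>>();
    private double lastTime = double.NegativeInfinity;

    public ComboRowLog(double maxGapSeconds)
    {
        this.maxGapSeconds = maxGapSeconds;
    }

    // Record a recognized gesture at the given timestamp (in seconds).
    public void Add(string gesture, double timeSeconds)
    {
        // Start a new row for the first gesture or after a long pause.
        if (rows.Count == 0 || timeSeconds - lastTime > maxGapSeconds)
        {
            rows.Add(new List<string>());
        }
        rows[rows.Count - 1].Add(gesture);
        lastTime = timeSeconds;
    }

    // One string per row, suitable for a floating text box.
    public IReadOnlyList<string> RowsAsText()
    {
        var result = new List<string>();
        foreach (var row in rows)
        {
            result.Add(string.Join(" + ", row));
        }
        return result;
    }
}
```

With a one-second gap, adding "Point" at 0.0 s, "ThumbUp" at 0.5 s, and "Fist" at 3.0 s yields two rows: the first holds the two-gesture combo and the second holds the lone gesture.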

Rapid Gestures: Finger Gun

This test repeats a combination of two gestures in quick succession. As shown in the video, some gesture inputs are ignored. The cause is a slight delay in gesture recognition when using the API, which means rapidly repeated gestures may not be suitable for gameplay.

Gestures in Sequence: Fireball

In this test, I focused on recognizing gestures more slowly and accurately. I configured the system to trigger a fireball when three specific gestures are performed in sequence. This approach worked quite well.
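A three-step trigger like this can be modeled as a small ordered matcher. The sketch below is plain C# written for illustration only: `GestureSequenceMatcher`, the per-step timeout, and the gesture names in the usage note are hypothetical and not taken from the project or from the XR Hands API.

```csharp
using System;

// Hypothetical matcher: reports success when gestures arrive in a fixed order.
public class GestureSequenceMatcher
{
    private readonly string[] sequence;
    private readonly double stepTimeoutSeconds;
    private int nextIndex;
    private double lastStepTime;

    public GestureSequenceMatcher(string[] sequence, double stepTimeoutSeconds)
    {
        if (sequence == null || sequence.Length == 0)
        {
            throw new ArgumentException("Sequence must contain at least one gesture.");
        }
        this.sequence = sequence;
        this.stepTimeoutSeconds = stepTimeoutSeconds;
    }

    // Feed one recognized gesture; returns true when it completes the sequence.
    public bool OnGesture(string gesture, double timeSeconds)
    {
        // Assumption: waiting too long between steps cancels the attempt.
        if (nextIndex > 0 && timeSeconds - lastStepTime > stepTimeoutSeconds)
        {
            nextIndex = 0;
        }

        if (gesture == sequence[nextIndex])
        {
            nextIndex++;
            lastStepTime = timeSeconds;
            if (nextIndex == sequence.Length)
            {
                nextIndex = 0; // Ready for the next attempt.
                return true;
            }
            return false;
        }

        // Wrong gesture: simple restart (no partial-prefix matching),
        // letting it count as step one if it matches the first gesture.
        if (gesture == sequence[0])
        {
            nextIndex = 1;
            lastStepTime = timeSeconds;
        }
        else
        {
            nextIndex = 0;
        }
        return false;
    }
}
```

For example, a matcher built with `new[] { "Fist", "OpenPalm", "Push" }` and a two-second timeout returns true only on the final "Push" of an in-order, in-time attempt, which is where the fireball would be spawned. The generous timeout reflects the slower, deliberate pacing used in this test.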

Complex Intersecting Gestures: Hand Signs from Naruto

In the Naruto anime, characters perform intricate hand signs to cast magic-like techniques. These hand signs require both hands to move in sync, frequently intertwining in a rapid sequence of 3 to 6 steps.

However, when testing this with the XR Hands API and Meta Quest 3, I encountered a major limitation: the system struggled to distinguish the two hands whenever they intersected. As a result, it was completely unable to track or register these gestures correctly.